Abstract

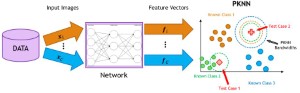

This article focuses on extending classifiers from N classes to N+1 classes without retraining, for tasks like explosive hazard detection (EHD) and automatic target recognition (ATR). In recent years, deep learning has become the state of the art across many domains. However, algorithms like convolutional neural networks (CNNs) suffer from the assumption of a closed-world model. That is, once a model is learned, a new class usually cannot be added without changing the architecture and retraining. Herein, we put forth a way to extend a number of deep learning algorithms while keeping their features in a locked state; i.e., features are not retrained for the new N+1 class. Different feature transformations, metrics, and classifiers are explored to assess the degree to which a new sample belongs to one of the N classes, and a decision rule is used for classification. While this extends a deep learner, it does not tell us whether a network with locked features has the potential to be extended. Therefore, we put forth a new method based on visually assessing cluster tendency to gauge the degree to which a deep learner can be extended (or not). Lastly, while we are primarily focused on tasks like aerial EHD and ATR, the experiments herein use community benchmark data sets for the sake of reproducible research.