Doing More With Less: Similarity Neural Nets and Metrics for Small Class Imbalanced Data Sets

Proc. SPIE 11418, Detection and Sensing of Mines, Explosive Objects, and Obscured Targets XXV, 2020

Abstract

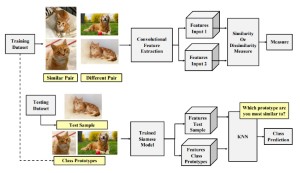

The focus of this article is deep learning on small, class-imbalanced data sets in support of explosive hazard detection (EHD) and automatic target recognition (ATR). To this end, we explore artificial neural networks that are driven by similarity rather than by classification or regression. Similarity can be emphasized via network design, e.g., Siamese networks, and/or the underlying metric, e.g., contrastive or triplet loss. The general goal of a similarity neural network (SNN) is discriminative training that focuses on the similarity between tuples of like (and unlike) inputs. As such, SNNs have the potential to learn better solutions from small data, i.e., to "do more with less". Herein, we explore different avenues and show that SNNs are essentially neural feature extractors followed by k-nearest neighbor classification. Rather than experimenting on a government data set that cannot be shared, we focus on benchmark community data sets for the sake of reproducible research. Preliminary findings are encouraging, as they suggest that SNNs have great potential for tasks like EHD and ATR.
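As a rough illustration of the ingredients named above, the following Python sketch implements one common form of pairwise contrastive loss, a hinge triplet loss, and k-nearest neighbor classification over embeddings. It is not the authors' implementation: the abstract does not specify the loss formulation, margin, distance, or value of k, so the margin of 1.0, Euclidean distance, majority voting, the class names, and the toy 2-D "embeddings" are all assumptions. In practice the embeddings would be produced by a trained (e.g., Siamese) feature extractor rather than written out by hand.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(e1, e2, same_class, margin=1.0):
    """Pairwise contrastive loss (one common formulation; margin is assumed).

    Like pairs are pulled together (penalized by squared distance);
    unlike pairs are pushed apart until they are at least `margin` away.
    """
    d = euclidean(e1, e2)
    if same_class:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge triplet loss on Euclidean distances (some variants use squared
    distances): the anchor should be closer to the positive (same class)
    than to the negative (other class) by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def knn_predict(query, ref_embeddings, ref_labels, k=3):
    """Classify `query` by majority vote among its k nearest reference embeddings."""
    nearest = sorted(
        zip(ref_embeddings, ref_labels),
        key=lambda pair: euclidean(query, pair[0]),
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D "embeddings" standing in for the output of a trained
# feature extractor; a real SNN would compute these from raw inputs.
ref = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [2.0, 2.0], [2.1, 1.9]]
labels = ["clutter", "clutter", "clutter", "target", "target"]

print(knn_predict([1.9, 2.0], ref, labels, k=3))  # target
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [0.0, 1.5]))  # 0.5
```

One practical consequence of splitting the model into an embedding network plus nearest-neighbor lookup is that new labeled examples can be added to the reference set without retraining a classification layer.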